Solving like Sherlock: A 15 minute case with Observability

A story of how better Observability reduced our investigation to a mere 15 minutes.

Introduction

Today I've got a story of how Observability really saves time! Our Observability improvements enabled us to debug and remediate a production issue in a mere 15 minutes! Our on-call stumbled upon an issue where we were giving away free access to one of our premium products. Just like Sherlock, or maybe faster actually, we resolved the issue in record time. This was thanks to the improvements we've made to our Observability. Our application allows us to quickly test theories and get answers in real time! Just what we needed to crack the case!

The Problem

One of our engineers was on-call minding his own business and accidentally realized our application was giving out more access than it should have. Our system was giving access to a premium features called "Transaction Import" for free! This feature allows our business owners to connect their bank accounts and get transactions imported automatically into the Accounting product, and enjoy other niceties. Not quite the type of problem users would complain about, but definitely a business issue we wanted to rectify as quickly as possible. We needed to dig in, and understand what was happening and stop giving out free access.

Anyone can be a Sherlock

We've made some Observability improvements to our system to ensure that the barrier for debugging and investigating issues is simply "curiosity". Once you're curious enough and are prepared to ask questions, you're fully qualified to debug our system. With a little bit of context, you're aptly positioned to be just like Sherlock. Or so we hope! That's the grand goal of our Observability improvements!

With this ideal in mind, we aggressively instrument all our system paths. For any request that's being processed in our system, we capture lots of breadcrumbs! As the request makes its way down our architecture layers, we add a lot of data to illuminate our system state and explain its choices. If a function is being called, we know what the inputs and outputs are. If we're looping, our telemetry captures the information from each iteration. If we're processing a conditional, the data we capture makes it obvious what choice the system made. If we're interacting with a third-party, we capture all the artifacts of that interaction. Our telemetry is populated with the goal of demystifying any weird state the system got into... quickly!

With all this telemetry at our disposal, we can really lean into this idea of being Sherlock. In DataDog, we can look at traces and gain deep insights into the system behaviour. In SumoLogic, we're able to write advanced queries to test the most bizarre or novel ideas. In Sentry, outside of your usual stack trace and variables, we have tags generated from telemetry. This means more powerful searches, grouping and analysis. All in all, our rich telemetry accelerates debugging with our tools.

What happened?

Rewind for context...

We recently monetized some of our core features and our system is responsible for regulating and providing access to premium features. When a new user signs up and creates a business, our system determines the access the business should have. Once the determination is made, our system puts the business on a plan. Each plan gives the business access to a set of features (or entitlements) via a group (entitlements group). So, once the business is created, we add the business to a plan, then add them to the entitlement group specified by the plan. After our system provides access, other products use this information to provide or withhold access.

After we monetized, we've consistently put businesses on one plan, but we recently introduced another plan to be used later. We have a plan called STARTER (you'll want to remember this name) that's assigned to new businesses on creation. This plan links to an entitlement group of the same name which provides access to our free features. We recently added a new plan called STARTER_LEGACY. The STARTER_LEGACY plan's entitlement group provides the same features as STARTER, but adds one new feature... Transaction Import! That's the same feature we're apparently giving away for free.

Now you're equipped with enough information to be curious and dangerous! If you've been following carefully, you're probably wondering if we assigned the wrong plan, or... never mind... I don't want to spoil the punch line.

Start the timer! ⏰

0 seconds - On-call discovery

So recall, our On-Call bumps into this issue and spends some time explaining what he saw.

2 minutes - context loading...

We know there's an API called InitializeBusiness that's responsible for assigning plans and entitlement groups after business creation. Let's pull up a trace in DataDog. Looking at the trace we can see there's a service method called EntitlementsService.initialize_business that does the actual initialization. Blah. What's even better, is we can pose lots of questions about what that method is doing, in real time!

4 minutes... theories loading...

The first question we pondered was whether we were assigning the wrong plan when businesses were being created. Fancy you ask! Good thing we can look at each EntitlementsService.initialize_business call and see what plan it's assigning for each business. Better yet, we can zoom out and see what the application is doing for all requests in a given period.

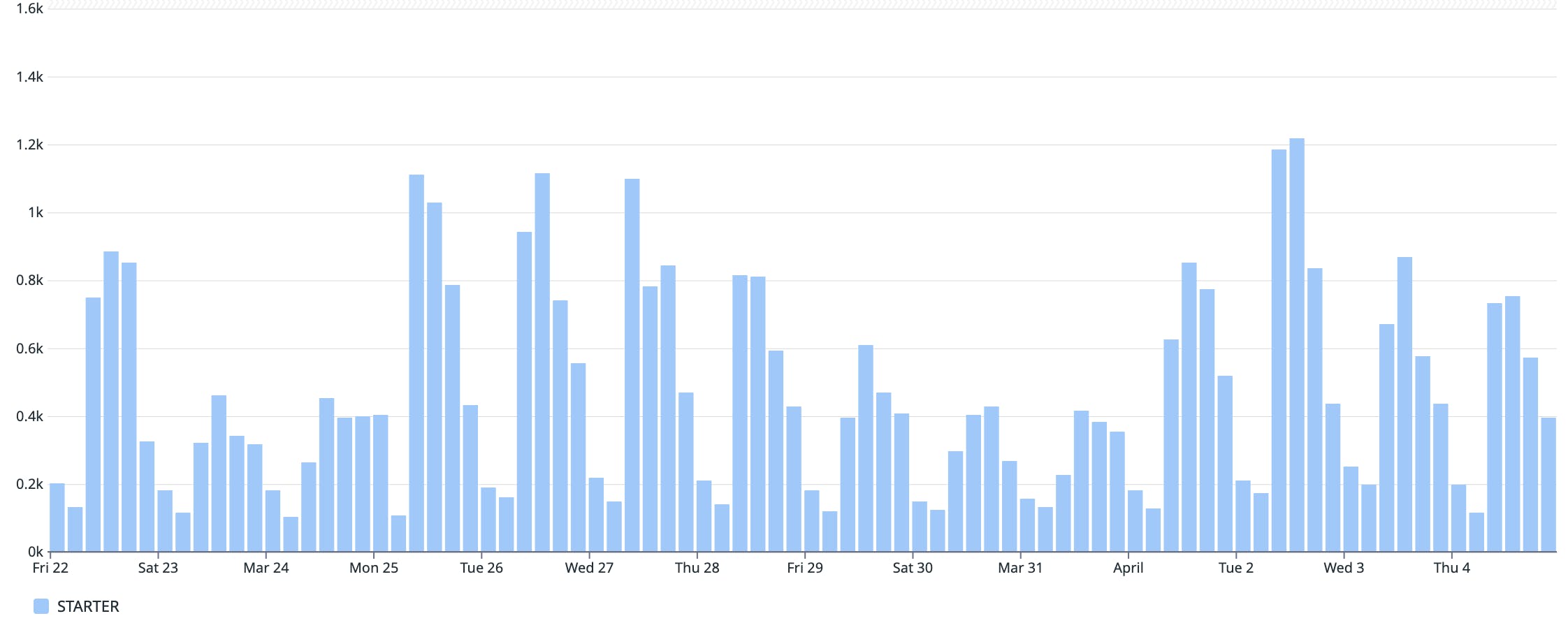

So... are we assigning the wrong plan? Below I'm looking at all our initialize_business call and grouping by the plan we're assigning.

Nope... that's not it!

Hmmm... so we're assigning the right plan. Every new business is still being put on the STARTER plan, not the new STARTER_LEGACY plan.

6 minutes... need another theory

Next, we took a look at all the data that we had on the span from the initialize_business call. Recall we're capturing lots of data points as the request moves down the stack. I saw something like this on our span in DataDog:

{

"application": "entitlements",

"business_id": "ecabb9dd-8fd5-4bad-8d04-a7607e807993",

"country": "CA",

"current_entitlements": [..., "TRANSACTION_IMPORT"],

"entrypoint": "kafka",

"plan": "STARTER",

"plan_entitlements": [..., "TRANSACTION_IMPORT"],

"plan_entitlement_group": "STARTER_FROM_LEGACY",

"plan_id": "987aa064-129b-40e6-aa1e-133985a3c51e",

"user_id": "f0982ea0-78b0-412a-a17a-14825d74e1c2",

"version": "7b96e6d-1b29222",

...

}

Running down that list, things we could confirm some of the behaviour we saw. The current_entitlements showed that it included TRANSACTION_IMPORT and the plan_entitlements also included TRANSACTION_IMPORT. So we're definitely giving access to the feature. We can also see that the plan is STARTER, so we're indeed assigning the correct plan.

Oh, wait! Something's not right! Did you see it?????? The plan is STARTER but the plan_entitlement_group is STARTER_LEGACY! That's not supposed to happen. Somehow, we're assigning the right plan, but the entitlement group on the plan is incorrect.

Can we confirm that?

Yes we can! We can graph that too! We can graph the plan_entitlement_group assigned across all of our initialize_business calls. And would you look at that? Looks like we've doing this for a little while. It dates back to about the ~22nd of the month.

What did we do on the 22nd? Asking around quickly we discovered we ran a script to introduce the new STARTER_FROM_LEGACY plan.

Jumping to the script, we quickly realized a logical error in the code. The script when run had overridden the entitlement group on the STARTER plan.

12 minutes... can we fix it?

Well first... let's stop the bleeding! Let's stop giving access that our customers shouldn't have. We don't want to be giving premium features for free. Digging in we realize we're able to fix it in the database, so that we did! We whipped up a quick query, executed it, then looked at our graphs again to confirm it was fixed.

Yuppp! No more free TRANSACTION_IMPORT feature. Well... we still need to fix the actual bug in our logic... but for now...

Stop the clock ⏰ it's 15 minutes!

Conclusion... after 15 minutes

When applied properly, Observability graduates from a mere buzzword status to offer real-time savings. It not only cuts down the time required for investigation but also speeds up remediation processes. In the example we discussed today, our ability to swiftly pinpoint the problem was a direct result of the enhancements we've incorporated to ensure our system supports extensive interrogability. The readily available context enabled us to quickly formulate and test hypotheses. Moreover, once the issue was resolved, we were able to confirm that our solution functioned as anticipated.

I really hope Sherlock is proud, or at least jealous. 😆

Have you got a story for how Observability cut your discovery/remediation time? I'd love to hear it!